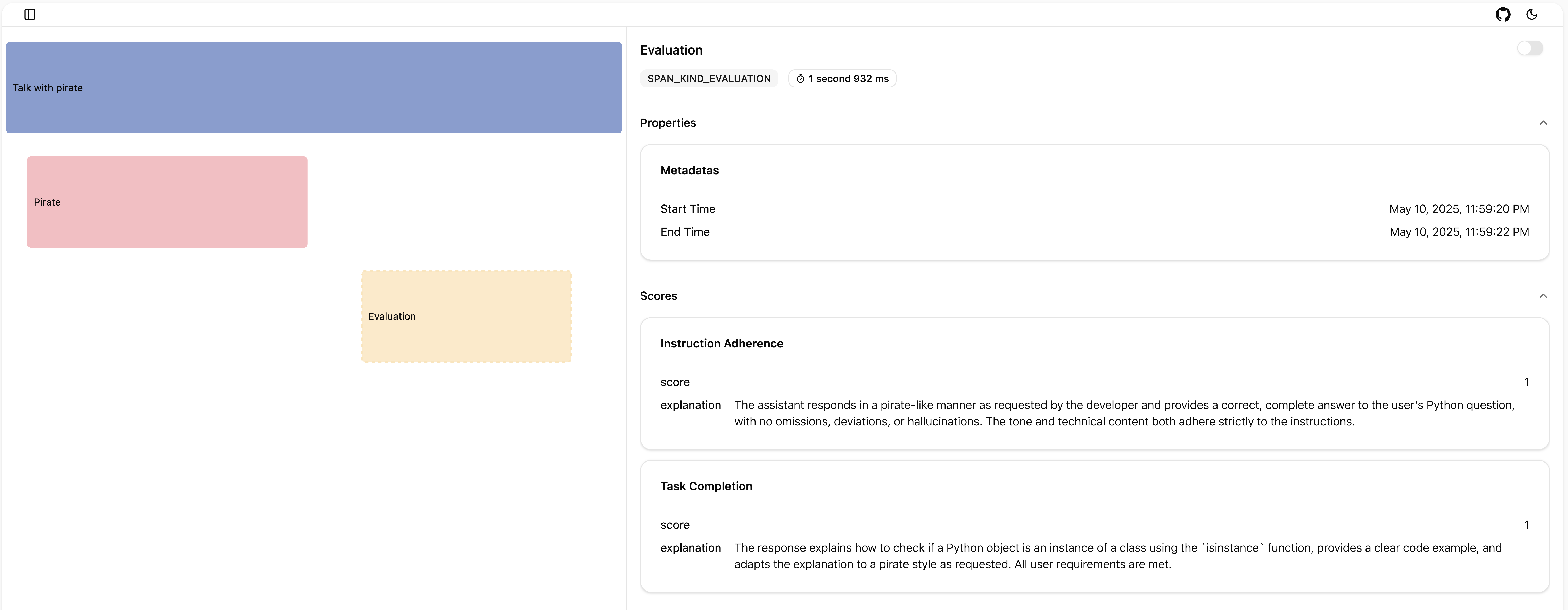

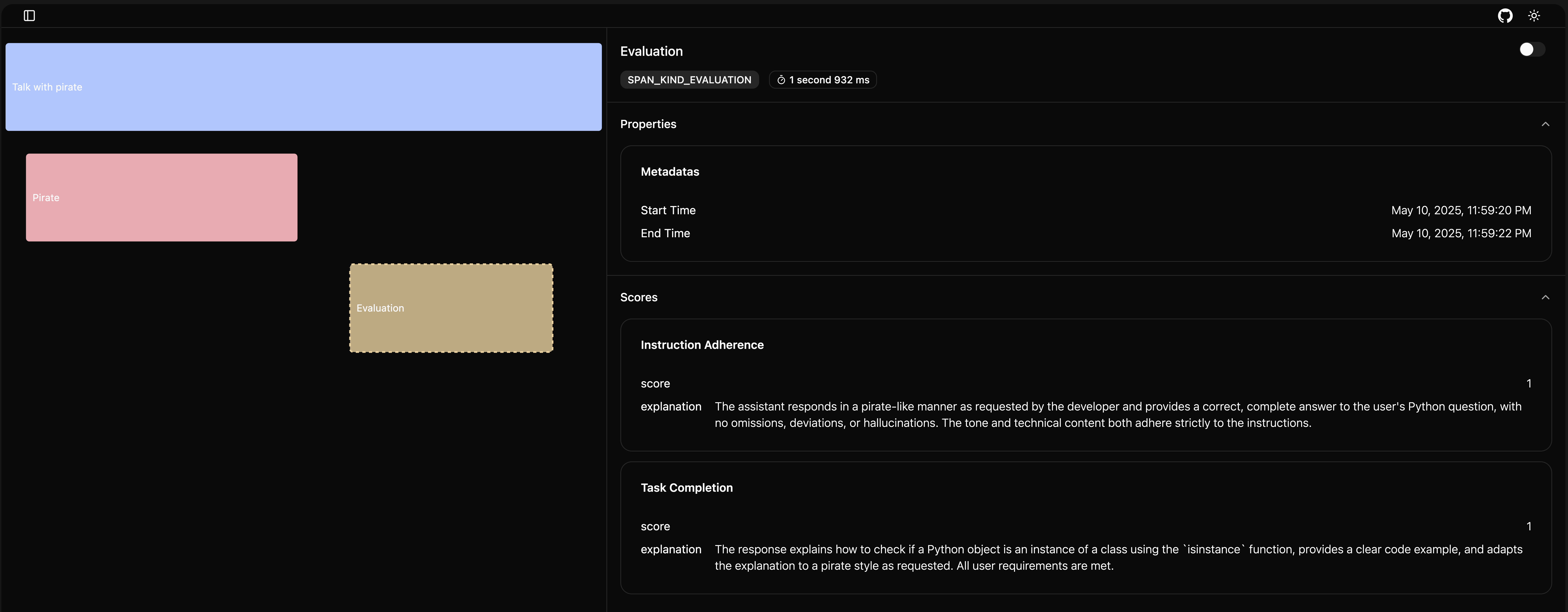

Behavior Evaluation

Automate evaluation of instruction adherence and task completion for your traced Pirate agent.

Pirate Example

Custom Configuration

| Property | Type | Default | Description |
|---|---|---|---|
| model | str | gpt-4o | Model name used for evaluation |
| temperature | float | 0.5 | Temperature parameter for evaluation |
| n_rounds | int | 5 | Number of evaluation rounds |
| max_concurrency | int | 10 | Maximum number of concurrent requests |
| api_key | str | OPENAI_API_KEY | API key for evaluation |
| base_url | str | OPENAI_BASE_URL | Service URL for evaluation |

By default, `api_key` and `base_url` are read from the `OPENAI_API_KEY` and `OPENAI_BASE_URL` environment variables, and the remaining options take the defaults listed in the table above. You can customize the settings as follows:

Pirate Example
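
To illustrate how the options in the table fit together, here is a minimal Python sketch. It assumes a hypothetical `EvalConfig` dataclass written only for this illustration; it is not the library's actual class or API. It shows the table's defaults, the fallback to environment variables, and how to override individual values.

```python
import os
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EvalConfig:
    """Hypothetical container mirroring the option table (not the real API)."""

    model: str = "gpt-4o"          # model name used for evaluation
    temperature: float = 0.5       # temperature parameter for evaluation
    n_rounds: int = 5              # number of evaluation rounds
    max_concurrency: int = 10      # maximum number of concurrent requests
    # Read from the environment at construction time when not passed explicitly.
    api_key: Optional[str] = field(
        default_factory=lambda: os.environ.get("OPENAI_API_KEY")
    )
    base_url: Optional[str] = field(
        default_factory=lambda: os.environ.get("OPENAI_BASE_URL")
    )


# Override only the options you want to change; the rest keep their defaults.
config = EvalConfig(model="gpt-4o-mini", temperature=0.0, n_rounds=3)
```

Reading the environment inside `default_factory` rather than at class-definition time means a key exported after import is still picked up when the config object is created.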